Running Llama 3 with the vLLM Library at Scale

Large Language Models (LLMs) have transformed artificial intelligence, enabling machines to understand and generate human-like text. Meta's Llama 3, released in 8B and 70B parameter sizes, is one of the latest advances in this space and improves on its predecessor in accuracy while remaining cost-efficient to run. This article walks you through running Llama 3 with vLLM, a library designed for efficient LLM inference and deployment at scale.

Llama 3 models are available on Hugging Face; this article uses meta-llama/Meta-Llama-3-8B-Instruct.

Why Choose vLLM?

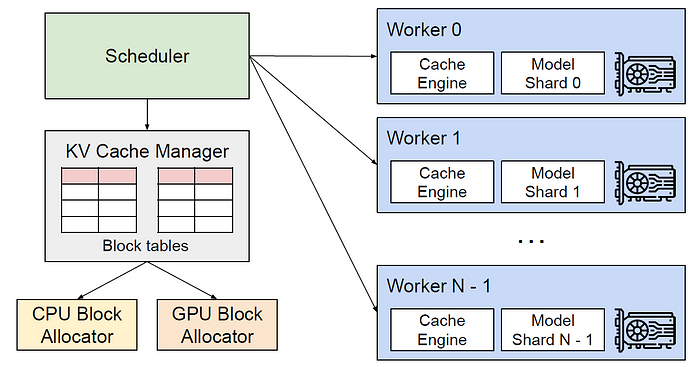

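vLLM (the name is often expanded as "Virtual Large Language Model") is an open-source library, originally developed at UC Berkeley, that optimizes memory management and inference speed for large language models. It relies on two key techniques. PagedAttention stores each request's attention key/value (KV) cache in fixed-size blocks, much like pages of virtual memory, so little GPU memory is lost to fragmentation. Continuous batching adds new requests to the running batch as soon as others finish, instead of waiting for a whole batch to complete. Together they let vLLM handle high-throughput workloads on memory-constrained hardware.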
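To make the PagedAttention idea concrete, here is a toy allocator. It is an illustration, not vLLM's code: each sequence's KV cache lives in fixed-size blocks drawn from a shared pool, and a per-sequence block table maps the sequence's logical blocks to physical block IDs. The class name `ToyBlockAllocator` and the 16-token block size are assumptions chosen for the example.

```python
from typing import Dict, List


class ToyBlockAllocator:
    """Toy paged KV-cache allocator: a shared pool of fixed-size blocks plus
    one block table (list of physical block IDs) per sequence."""

    def __init__(self, num_blocks: int, block_size: int = 16):
        self.block_size = block_size
        self.free_blocks: List[int] = list(range(num_blocks))  # physical block IDs
        self.block_tables: Dict[str, List[int]] = {}           # seq id -> physical blocks
        self.num_tokens: Dict[str, int] = {}                   # seq id -> tokens stored

    def append_tokens(self, seq_id: str, n: int) -> None:
        """Reserve cache space for n more tokens, taking a new block only when
        the sequence's last block is full. Fails without side effects if the pool is short."""
        table = self.block_tables.get(seq_id, [])
        total = self.num_tokens.get(seq_id, 0) + n
        blocks_needed = -(-total // self.block_size)  # ceiling division
        extra = blocks_needed - len(table)
        if extra > len(self.free_blocks):
            raise MemoryError("not enough free KV-cache blocks")
        for _ in range(extra):
            table.append(self.free_blocks.pop())
        self.block_tables[seq_id] = table
        self.num_tokens[seq_id] = total

    def free(self, seq_id: str) -> None:
        """Return a finished sequence's blocks to the shared pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.num_tokens.pop(seq_id, None)


if __name__ == "__main__":
    alloc = ToyBlockAllocator(num_blocks=8, block_size=16)
    alloc.append_tokens("a", 20)  # 20 tokens -> 2 blocks
    alloc.append_tokens("b", 5)   # 5 tokens  -> 1 block
    alloc.append_tokens("a", 12)  # 32 tokens -> still 2 blocks
    print(len(alloc.block_tables["a"]), len(alloc.free_blocks))  # 2 5
    alloc.free("a")
    print(len(alloc.free_blocks))  # 7
```

Because a sequence grows one block at a time, at most one partially filled block per sequence is wasted, rather than a contiguous reservation sized for the longest possible output. vLLM's real implementation goes further, for example by sharing blocks between sequences.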

Setting Up vLLM

Prerequisites

Before you begin, ensure you have the following:

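- A server with a compatible GPU (e.g., NVIDIA A10, A100, or AMD MI300) and enough GPU memory for the model. In 16-bit precision, the 8B model's weights alone take about 16 GB (8 billion parameters × 2 bytes).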

- Linux with a recent Python 3 release (the vLLM installation guide lists the currently supported versions).

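- Internet access for downloading the model and dependencies.

- A Hugging Face account that has been granted access to the gated Llama 3 repository, plus an access token (set with huggingface-cli login or the HF_TOKEN environment variable).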
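A quick preflight script can confirm these prerequisites before you install anything heavy. This is a stdlib-only sketch: the function name `preflight`, the assumed minimum of Python 3.9, and the use of `nvidia-smi` or `rocm-smi` on the PATH as a proxy for a working GPU driver are all assumptions to adjust for your vLLM version and hardware.

```python
import os
import shutil
import sys
from typing import Dict, Tuple


def preflight(min_python: Tuple[int, int] = (3, 9)) -> Dict[str, bool]:
    """Report which prerequisites appear to be satisfied on this machine."""
    return {
        # Assumed minimum; check the vLLM install guide for the supported range
        "python_ok": sys.version_info[:2] >= min_python,
        # vLLM targets Linux
        "linux": sys.platform.startswith("linux"),
        # Driver tools on PATH are only a rough proxy for a usable GPU
        "nvidia_gpu_tools": shutil.which("nvidia-smi") is not None,
        "amd_gpu_tools": shutil.which("rocm-smi") is not None,
        # False here is fine if you authenticated with `huggingface-cli login` instead
        "hf_token_env": bool(os.environ.get("HF_TOKEN")),
    }


if __name__ == "__main__":
    for check, ok in preflight().items():
        print(f"{check:18} {'yes' if ok else 'no'}")
```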

First, install the vLLM library using pip:

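```bash
pip install vllm
```

If you plan to perform distributed inference, particularly across multiple nodes, you will also need Ray (on a single machine, vLLM can also coordinate several GPUs with Python multiprocessing):

```bash
pip install ray
```

Loading and Running Llama 3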

To load the Llama 3 model into vLLM, use the following Python code:

```python
from vllm import LLM

# Load the Llama 3 8B Instruct model; the weights are downloaded from Hugging Face on first use
model = LLM(model="meta-llama/Meta-Llama-3-8B-Instruct")
```

You can then run inference with the model as shown below:

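```python
from transformers import AutoTokenizer
from vllm import SamplingParams

# Load the tokenizer so the prompt can be wrapped in Llama 3's chat template
tokenizer = AutoTokenizer.from_pretrained("meta-llama/Meta-Llama-3-8B-Instruct")

# Prepare the input message
messages = [{"role": "user", "content": "What is the capital of France?"}]
formatted_prompt = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)

# vLLM's default max_tokens is 16, so set an explicit limit
sampling_params = SamplingParams(temperature=0.7, max_tokens=256)

# generate() takes a list of prompts and returns one RequestOutput per prompt
outputs = model.generate([formatted_prompt], sampling_params)
print(outputs[0].outputs[0].text)
```

Deploying at Scale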
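Scaling up starts with deciding how many GPUs each model replica needs. The following is a rough, weights-only sizing sketch, not part of vLLM. It assumes 16-bit weights (2 bytes per parameter), lets vLLM use 90% of each GPU (mirroring vLLM's default gpu_memory_utilization of 0.9), and requires the tensor-parallel degree to divide the model's attention-head count evenly. The function name `min_tensor_parallel_size` is hypothetical, and the parameter counts in the example are rounded. Because the KV cache and activations need memory on top of the weights, treat the answer as a lower bound.

```python
from typing import Optional


def min_tensor_parallel_size(
    num_params: float,
    num_attention_heads: int,
    gpu_memory_gb: float,
    bytes_per_param: int = 2,      # assumption: BF16/FP16 weights
    usable_fraction: float = 0.9,  # assumption: share of each GPU given to vLLM
    max_gpus: int = 8,
) -> Optional[int]:
    """Smallest GPU count whose per-GPU share of the weights fits, or None."""
    weights_gb = num_params * bytes_per_param / 1e9
    budget_gb = gpu_memory_gb * usable_fraction
    for tp in range(1, max_gpus + 1):
        # Tensor parallelism splits attention heads evenly across GPUs
        if num_attention_heads % tp != 0:
            continue
        # Weights-only estimate: ignores KV cache, activations, replicated layers
        if weights_gb / tp <= budget_gb:
            return tp
    return None


if __name__ == "__main__":
    # Llama 3 8B (32 heads) on a 24 GB A10: ~16 GB of weights fits on one GPU
    print(min_tensor_parallel_size(8e9, 32, 24))   # 1
    # Llama 3 70B (64 heads) on 80 GB A100s: ~140 GB of weights
    print(min_tensor_parallel_size(70e9, 64, 80))  # 2
    # Same model on 24 GB GPUs: 5, 6, and 7 are skipped because they do not divide 64
    print(min_tensor_parallel_size(70e9, 64, 24))  # 8
```

The 70B answer of 2 GPUs leaves only about 2 GB per GPU for the KV cache, so in practice you would likely pick a larger tensor_parallel_size, such as 4 or 8.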

For consistent deployment across diverse environments, you can use pre-built container images. This method supports both on-premises and cloud-based deployments.

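```bash
docker pull vllm/vllm-openai:latest

docker run -d --gpus all --ipc=host -p 8000:8000 \
    -v ~/.cache/huggingface:/root/.cache/huggingface \
    -e HF_TOKEN="$HF_TOKEN" \
    vllm/vllm-openai:latest \
    --model meta-llama/Meta-Llama-3-8B-Instruct
```

The official image on Docker Hub is vllm/vllm-openai. It starts vLLM's OpenAI-compatible API server on port 8000, and any arguments after the image name are passed to that server. The --gpus all flag requires the NVIDIA Container Toolkit, and --ipc=host gives PyTorch's worker processes enough shared memory for tensor-parallel inference.

vLLM also provides a simple RESTful API server for integration with other systems. To run it outside a container, use the following command: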

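```bash
python -m vllm.entrypoints.api_server --model meta-llama/Meta-Llama-3-8B-Instruct
```

This server listens on port 8000 by default. vLLM describes it as a demonstration server and recommends the OpenAI-compatible server (python -m vllm.entrypoints.openai.api_server, or vllm serve in recent releases) for production. You can then interact with the model via its HTTP endpoint: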

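```bash
curl http://localhost:8000/generate \
    -H "Content-Type: application/json" \
    -d '{"prompt": "Dell is", "n": 5, "temperature": 0.8, "max_tokens": 32}'
```

The response is a JSON object whose "text" field lists the five sampled completions, each prefixed with the prompt.

Distributed Inference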

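For large-scale deployments, you can shard the model across multiple GPUs with tensor parallelism. The tensor_parallel_size argument sets the number of GPUs, and Ray manages the worker processes. The asynchronous engine below accepts many concurrent requests and batches them together: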

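```python
import asyncio
import uuid

from vllm import SamplingParams
from vllm.engine.arg_utils import AsyncEngineArgs
from vllm.engine.async_llm_engine import AsyncLLMEngine

# Define the engine arguments
args = AsyncEngineArgs(
    model="meta-llama/Meta-Llama-3-8B-Instruct",
    tensor_parallel_size=8,              # number of GPUs; must evenly divide the attention heads
    distributed_executor_backend="ray",  # use Ray to manage the GPU workers
    enforce_eager=True,                  # skip CUDA graph capture: faster startup, lower peak throughput
)


async def generate(engine: AsyncLLMEngine, prompt: str, **sampling_kwargs) -> str:
    """Submit one request and return its final generated text."""
    sampling_params = SamplingParams(**sampling_kwargs)
    request_id = uuid.uuid4().hex  # every in-flight request needs a unique ID
    final_output = None
    # engine.generate is an async generator that yields the output as it grows
    async for request_output in engine.generate(prompt, sampling_params, request_id):
        final_output = request_output
    return final_output.outputs[0].text


async def main() -> None:
    # Create the engine in the entry point rather than at import time
    engine = AsyncLLMEngine.from_engine_args(args)
    prompts = ["What is the capital of France?", "Name three uses of GPUs."]
    # Concurrent requests are batched together by the engine (continuous batching)
    texts = await asyncio.gather(*(generate(engine, p, temperature=0.7, max_tokens=64) for p in prompts))
    for text in texts:
        print(text)


if __name__ == "__main__":
    asyncio.run(main())
```

Conclusion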

Running Llama 3 with the vLLM library offers a powerful and efficient way to deploy large language models at scale. By combining PagedAttention with continuous batching, vLLM delivers high throughput while making efficient use of GPU memory. Whether you deploy on-premises or in the cloud, vLLM provides the flexibility and performance needed for demanding AI applications.
